Sailing through the Challenges of Artificial Intelligence in Institutional Research

Artificial Intelligence (AI) is revolutionizing many aspects of our world, including institutional research (IR), providing unprecedented capabilities for data analysis, predictive modeling, and decision-making. To remain influential contributors in higher education, IR professionals must actively engage in discussions about AI, demonstrate their expertise, and contextualize AI’s use within their institutions. Understanding AI concepts such as machine learning (ML) and deep learning (DL), and articulating the implications of AI for student success, equity, and institutional accountability, are essential first steps.

As AI continues to transform higher education, ethical awareness of data use and proactive collaboration will be key to leveraging its potential responsibly. However, alongside its transformative potential lies a complex web of risks and limitations (Zheng & Webber, 2023). This discussion examines the dangers IR faces in navigating these waters: ethical concerns surrounding privacy, consent, and compliance; limitations in addressing data bias and systemic inequities; the lack of cultural and contextual awareness in AI-supported decision-making; and the risks of over-reliance on AI alongside a growing skills gap among IR professionals. By unpacking these issues, we aim to illuminate the path toward a more balanced and responsible integration of AI into IR practice.

Ethical Drift: Privacy, Consent, and Compliance Risks

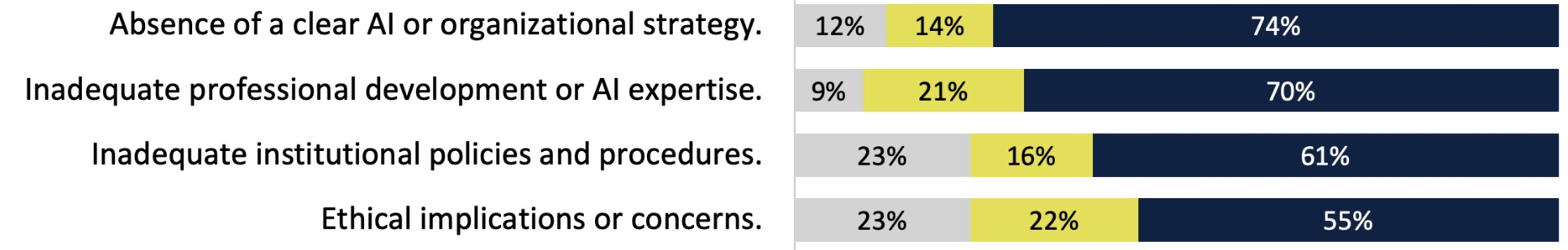

In 2019, the Association for Institutional Research (AIR) released its Statement of Ethical Principles on the use of data, which calls for accurate information, appropriate research methods, and the protection of privacy (AIR, 2019). Further, AIR’s 2023 Survey on The Use of Generative Artificial Intelligence in Institutional Research/Effectiveness found that ethical concerns and implications rank among the top five reasons for the lack of generative AI use in offices with lower generative AI maturity, as shown in the chart below:

Degree that Items are Reasons for Lack of Generative AI Use

Source: AIR Community Survey, Chart 4 (Jones & Ross, 2024).

Among respondents’ comments, two themes stand out: that “ethical considerations are given social injustices entwined within the world wide web,” and the need for [newly created] “guidelines for plagiarism and appropriation” (Jones & Ross, 2024, p. 6). Another comment warns that “bias is a major concern when using these tools. Generative AI propagates ideals of the larger society which make these tools dangerous as they spread misinformation and inherent bias” (Jones & Ross, 2024). In response to these concerns, the survey authors recommend that IR professionals:

“Be clear who owns the data you put in. Keep the AIR Statement of Ethics in mind when you consider using AI tools. Learn how to prompt [the] AI tool to 'train' it. Verify results with another tool. Apply good research techniques.” (Jones & Ross, 2024, p. 6).

Along with the potential for bias, privacy is a prevailing concern in discussions of AI ethics. Although privacy regulations vary by jurisdiction, it is important to establish a regulatory framework for AI use in IR that accounts for the rules already in force. For example, U.S. researchers can refer to the latest Executive Order on AI (White House, 2025), while their counterparts in the European Union should consider the General Data Protection Regulation, or GDPR (European Parliament and Council of the European Union, 2016).

At the same time, 55% of respondents in AIR’s survey caution that the rapid evolution of AI technologies introduces substantial risks, often outpacing current regulatory measures. Concerns also extend to the adequacy of regulatory institutions themselves. In the biomedical sector, for instance, where debates on the ethics of AI are already well established, critics note that Institutional Review Boards (IRBs) in the United States focus primarily on risks to individual human subjects rather than on broader societal impacts. Bernstein et al. (2021) observe that although there have been calls for formal ethics reviews of AI research, few organizations currently enforce such requirements. This points to a pressing need for updated, comprehensive regulatory approaches that address both individual and societal risks.

Ultimately, debate continues over the ethical implications of AI in IR in terms of accuracy, appropriateness, and privacy. Many higher education institutions are exploring the development of AI policies or guidelines, and IR professionals should be ready to contribute to that work. To set our sails toward progress, we need to take an active part in campus discussions, especially while emerging regulations try to catch up with new technologies in time to address these ethical concerns.

Magnetic Anomalies: Data Bias and AI System Reliance

The efficacy and accuracy of AI systems in IR depend on the quality, completeness, and impartiality of the data they analyze. Skewed, incomplete, or unrepresentative datasets compromise effectiveness and turn AI into a compass subject to magnetic interference: instead of providing accurate guidance, it risks leading institutions off course. Misrepresentation often results from a lack of diversity in training data or from inappropriate generalization of findings, leading to erroneous conclusions. For instance, predictive models trained on pre-COVID-19 data may not account for pandemic-induced shifts, potentially producing biased decisions. Historical data can also perpetuate existing inequalities, as when admissions algorithms favor traditionally resource-rich groups, reinforcing systemic disparities (Urmeneta, 2023).
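
One common way to check for the kind of shift described above is to compare how a variable was distributed when a model was trained with how it is distributed now. The sketch below computes the Population Stability Index (PSI), a drift statistic widely used in credit-risk modeling and borrowed here as an illustration. The function names, the enrollment-mode bins, and the proportions are hypothetical, and the 0.1/0.25 cut-offs are an informal rule of thumb rather than a formal standard.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as proportions.

    PSI = sum over bins of (actual - expected) * ln(actual / expected).
    `eps` replaces empty bins so the logarithm stays defined.
    """
    if len(expected) != len(actual):
        raise ValueError("both distributions need the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def describe_shift(psi):
    """Informal rule-of-thumb bands (an assumption, not a formal standard)."""
    if psi < 0.1:
        return "little shift"
    if psi < 0.25:
        return "moderate shift"
    return "large shift: revisit the model before relying on it"


# Hypothetical shares of students by enrollment mode: in-person, hybrid, online.
pre_pandemic = [0.80, 0.15, 0.05]  # cohort the model was trained on
current = [0.55, 0.25, 0.20]       # cohort the model is now scoring

psi = population_stability_index(pre_pandemic, current)
print(f"PSI = {psi:.3f} ({describe_shift(psi)})")
```

With these illustrative numbers the PSI is about 0.35, which the rule of thumb would treat as a large shift. A high value does not say the model is wrong, only that its training data no longer resemble the population it is scoring, which is exactly when human review matters most.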

The harm of biased or incomplete datasets goes beyond technical inaccuracy to discriminatory outcomes. These biases arise from flaws in algorithms and datasets and can produce unfair decisions based on irrelevant factors such as race or religion. For example, an AI system in higher education may screen out applicants from specific high schools or areas because of historical dropout rates, disadvantaging qualified candidates (Ivanov, 2023). Such misuse of data perpetuates systemic inequities, widening social gaps instead of promoting fairness, and undermines the reliability on which equitable decision-making depends. As a result, lapses in data integrity erode institutional trust and pose significant risks to fairness in higher education, ultimately undermining these systems’ intended goals (Jafari & Keykha, 2023).
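
A simple way to surface the kind of disparity described above is to audit a model's recommendations by group. The sketch below compares selection rates across groups and reports each group's rate relative to the most-selected group. The 0.8 ("four-fifths") threshold is borrowed from U.S. employment-selection guidance as a screening heuristic and is an assumption here, not an IR standard; the school labels, counts, and function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of records selected in each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True if the model recommended the applicant.
    """
    totals = defaultdict(int)
    selected_counts = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            selected_counts[group] += 1
    return {g: selected_counts[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    top = max(rates.values())
    if top == 0:
        # Nobody was selected, so there is no relative disparity to report.
        return {g: 1.0 for g in rates}
    return {g: r / top for g, r in rates.items()}


def flag_disparities(rates, threshold=0.8):
    """Groups whose impact ratio falls below `threshold` (four-fifths heuristic)."""
    return sorted(g for g, ratio in impact_ratios(rates).items() if ratio < threshold)


# Hypothetical model recommendations for applicants from two high schools.
records = (
    [("School A", True)] * 60 + [("School A", False)] * 40
    + [("School B", True)] * 30 + [("School B", False)] * 70
)
rates = selection_rates(records)
print(rates)                    # {'School A': 0.6, 'School B': 0.3}
print(flag_disparities(rates))  # ['School B']
```

A flag is a prompt for human review of why the gap exists, not proof of discrimination on its own; the same gap may reflect a proxy such as historical dropout rates that the institution should not be using.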

Addressing these issues requires rigorous data validation and ethical oversight, both to ensure that datasets accurately represent diverse populations and to avoid reliance on incomplete information (Siminto et al., 2023). Data quality can be improved and bias reduced by combining multiple data sources and applying regular cleaning procedures (Galgotia & Lakshmi, 2021). Furthermore, ethical frameworks that prioritize transparency, accountability, and fairness help align AI systems with institutional values (Jafari & Keykha, 2023), while human involvement in decision-making helps prevent unintended harm and promote equitable outcomes (Ivanov, 2023).
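
As one first step in the data validation described above, an IR office might compare the make-up of a training dataset with a reference population, such as the institution's full enrollment. The sketch below is a hypothetical helper, assuming each record already carries a group label and that reference shares come from a trusted source; the 5-percentage-point tolerance and the first-generation example are illustrative choices, not recommendations from the cited authors.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares, tolerance=0.05):
    """Compare group shares in a dataset with reference population shares.

    Returns (gaps, flagged): `gaps` maps each reference group to
    sample share minus reference share; `flagged` lists groups that are
    under-represented by more than `tolerance` (as a proportion).
    """
    counts = Counter(sample_groups)
    n = sum(counts.values())
    gaps = {}
    for group, ref_share in reference_shares.items():
        # Counter returns 0 for groups that never appear in the sample.
        sample_share = counts[group] / n if n else 0.0
        gaps[group] = sample_share - ref_share
    flagged = sorted(g for g, gap in gaps.items() if gap < -tolerance)
    return gaps, flagged


# Hypothetical training sample vs. the institution's full enrollment.
training_sample = ["first-generation"] * 150 + ["continuing-generation"] * 850
enrollment_shares = {"first-generation": 0.35, "continuing-generation": 0.65}

gaps, flagged = representation_gaps(training_sample, enrollment_shares)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
print("under-represented:", flagged)
```

This sketch only checks groups listed in the reference shares and looks at one attribute at a time; intersecting groups (for example, first-generation students at a particular campus) would need the same comparison on combined labels.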

The Compass Without Context: Limitations in Nuance and Cultural Awareness

Beyond data bias and system reliance, another critical limitation of AI in IR is its lack of inherent understanding of the cultural and socio-political contexts from which data originate, much like using a compass without considering the surroundings. Several studies have documented this absence of contextual awareness, which yields outputs that overlook or misinterpret critical subtleties; in particular, algorithms tend to embed the values and social practices of the settings in which they are conceived (Mohamed et al., 2020; Prabhakaran et al., 2022), reinforcing existing inequalities.

Bird et al. (2024), for example, observed that algorithmic bias in predictions of community college student success can reinforce racial inequities. Nyaaba et al. (2024) further discuss how AI models trained on Western data can inadvertently impose ideologies on non-Western educational settings, further marginalizing indigenous knowledge and practices.

This limitation, compounded by AI’s inability to consider the complex lived experiences of individuals from non-Western backgrounds, can skew an institution’s decisions, especially those intended to promote diversity and inclusion, such as enrollment strategies, analyses of attrition rates, and projections of student success.

Terenzini’s (1993, 2003) classic framework of organizational tiers of intelligence offers a useful lens as AI enters IR in higher education. Although AI is highly advantageous for the technical and analytical functions of IR (Tier 1), human interpretation remains essential to harness it effectively and accurately. Human oversight ensures that AI outputs are relevant and reflect the context they aim to analyze.

Terenzini posits contextual intelligence (Tier 3) as the summit of an institutional researcher’s competencies for supporting evidence-based decision-making. Applying contextual intelligence when evaluating AI-derived analytical results helps ensure that cultural and contextual sensitivities are safeguarded even as AI is leveraged. After all, AI is just the compass that guides the navigator, and it is the navigator (in this case, the institutional researcher) who chooses the most accurate path to arrive safely at the destination.

Navigational Hazards: Over-Reliance and the Skills Gap

The increasing reliance on AI in decision-making poses significant risks when outputs are accepted without critical evaluation. Such reliance can profoundly affect ethical decision-making, both individually and societally (Poszler & Lange, 2024). Without human deliberation on ethically complex issues, the nuanced, context-sensitive judgments discussed above risk being replaced by algorithmic efficiency that may not fully consider broader moral implications.

AI offers fast, seemingly optimal solutions that can encourage users to prioritize efficiency over practical, context-sensitive reasoning. This can lead to errors, as users favor AI-generated shortcuts that may overlook complex ethical considerations (Zhai et al., 2024). IR professionals must therefore exercise caution when integrating AI tools into their workflows. Being transparent about the limitations of these tools keeps decision-makers aware that they are instruments, not arbiters. AI should be positioned as a valuable resource, one component of a broader decision-making process, rather than a definitive solution.

The integration of AI tools in IR also points to a possible skills gap among professionals, underscoring the importance of training and capacity building for the effective and ethical use of these technologies (Malik et al., 2024). Faculty and staff in higher education must adapt by acquiring competencies that support the responsible application of AI; researchers have pointed to a lack of AI experience within academic settings in certain countries (Universidad Santo Tomás et al., 2024).

Zheng and Hoolsema (2024) emphasize that this is a pivotal moment for IR professionals to embrace AI and generative AI tools: given AI’s rapid adoption and potential to transform operations, failing to enhance their skill sets could restrict their contribution to campus analytics efforts. AIR (2024) suggests that using AI demands programming proficiency, such as SQL for managing databases and Python or R for data preparation, as well as collaboration with IT teams to develop robust data resources that meet institutional needs.

Integrating AI into institutional research workflows requires a collaborative, interdisciplinary approach that extends across academic disciplines and administrative areas. For instance, partnerships between IR professionals and IT departments to develop robust data resources and analytics capabilities exemplify the value of bringing together experts with diverse skills (Deom et al., 2023). A balanced approach is equally essential, with AI implemented gradually and under clear guidance. This progressive strategy not only mitigates risks but also builds confidence within institutions. To reiterate, AI is a compass, and only with a competent navigator, supported by the work and cooperation of the crew, can the ship complete its journey.

The integration of AI into IR presents both opportunities and profound challenges. We are excited about AI’s potential to enhance decision-making and operational efficiency, but its limitations, including ethical dilemmas, bias, cultural and contextual insensitivity, and the risk of over-reliance, highlight the need for cautious and informed implementation. IR professionals have an important role to play. As AI continues to evolve, institutional leaders must prioritize transparency, ethical oversight, and human expertise to mitigate risks and uphold equity and inclusivity. Ultimately, navigating these challenges requires a collaborative effort, with IR professionals, policymakers, and technologists working together to harness AI’s benefits while keeping it aligned with institutional values and societal responsibilities. The journey forward, then, is one of balance, vigilance, and ethical commitment.

Roi Christian James Avila is a graduate student from the Philippines specializing in Institutional Research with a Master’s in Research and Innovation in Higher Education, under an Erasmus Mundus program. His academic pursuits explore the intersections of sustainability, disaster management, and transformative pedagogy in shaping the future of higher education.

Laura Elizabeth Chinde is an Ecuadorian member of the Erasmus Mundus Master in Research and Innovation in Higher Education (MARIHE) program, specializing in institutional research. She holds a dual degree in Mass Communication and English Language and Literature from Korea University and is currently conducting research on the impact of think tanks on educational policymaking.

Lourdes Chininin, from Peru, is currently pursuing a Master’s in Research and Innovation in Higher Education with the support of an Erasmus Mundus Scholarship. She holds a law degree and is also a graduate of a Master’s program in Administrative Law and Market Regulation. With professional experience in public service regulation, her work has focused particularly on quality assurance in higher education. Her research interests center on the intersection of public policy and regulation in higher education.

Rachael Kipkoech is a graduate student currently pursuing a Master’s in Research and Innovation in Higher Education (MARIHE). She also holds a Bachelor of Laws from Kabarak Law School and is passionate about contextualising learning in Africa.

Zoe Maldonado Velez is an Argentinian biologist and biology teacher specializing in public policy. With over four years of experience as a manager in higher education institutions, she is currently pursuing an Erasmus Mundus Master in Research and Innovation in Higher Education (MARIHE), focusing on institutional research. Her thesis explores the methods and role of education observatories in a data-driven context.

References

Association for Institutional Research. (2019). Statement of ethical principles. https://www.airweb.org/ir-data-professional-overview/statement-of-ethical-principles

Association for Institutional Research. (2023). Learning Analytics and Learning Outcome Assessment: A Viable Partnership. https://www.airweb.org/article/2023/08/15/learning-analytics-and-learning-outcome-assessment-a-viable-partnership

Association for Institutional Research. (2024). Developing Data Science and Advanced Analytics Capabilities to Expand IR/IE Office Portfolios. https://www.airweb.org/article/2024/11/25/developing-data-science-and-advanced-analytics-capabilities-to-expand-ir-ie-office-portfolios

Bernstein, M. S., Levi, M., Magnus, D., Rajala, B., Satz, D., & Waeiss, C. (2021). ESR: Ethics and Society Review of Artificial Intelligence Research. arXiv. https://doi.org/10.48550/arXiv.2106.11521

Bird, K. A., Castleman, B. L., & Song, Y. (2024). Are algorithms biased in education? Exploring racial bias in predicting community college student success. Journal of Policy Analysis and Management, pam.22569. https://doi.org/10.1002/pam.22569

European Parliament and the Council of the European Union. (2016). Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation). Official Journal of the European Union. https://eur-lex.europa.eu/eli/reg/2016/679/oj

Malik, A., Khan, M. L., Hussain, K., Qadir, J., & Tarhini, A. (2024). AI in higher education: Unveiling academicians’ perspectives on teaching, research, and ethics in the age of ChatGPT. Interactive Learning Environments, 1–17. https://doi.org/10.1080/10494820.2024.2409407

Mohamed, S., Png, M.-T., & Isaac, W. (2020). Decolonial AI: Decolonial Theory as Sociotechnical Foresight in Artificial Intelligence. Philosophy & Technology, 33(4), 659–684. https://doi.org/10.1007/s13347-020-00405-8

Poszler, F., & Lange, B. (2024). The impact of intelligent decision-support systems on humans’ ethical decision-making: A systematic literature review and an integrated framework. Technological Forecasting and Social Change, 204, 123403. https://doi.org/10.1016/j.techfore.2024.123403

Prabhakaran, V., Qadri, R., & Hutchinson, B. (2022). Cultural Incongruencies in Artificial Intelligence (Version 1). arXiv. https://doi.org/10.48550/ARXIV.2211.13069

Universidad Santo Tomás, Ramírez Téllez, A., Fonseca Ortiz, L. M., Universidad Santo Tomás, Triana, F. C., & Universidad Santo Tomás. (2024). Inteligencia artificial en la administración universitaria: Una visión general de sus usos y aplicaciones. Revista Interamericana de Bibliotecología, 47(2). https://doi.org/10.17533/udea.rib.v47n2e353620

White House. (2025). Fact Sheet: President Donald J. Trump Takes Action to Enhance America’s AI Leadership. https://www.whitehouse.gov/fact-sheets/2025/01/fact-sheet-president-donald-j-trump-takes-action-to-enhance-americas-ai-leadership/

Zhai, C., Wibowo, S., & Li, L. D. (2024). The effects of over-reliance on AI dialogue systems on students’ cognitive abilities: A systematic review. Smart Learning Environments, 11(1), 28. https://doi.org/10.1186/s40561-024-00316-7

Zheng, H., & Hoolsema, M. (2024). Developing Data Science and Advanced Analytics Capabilities to Expand IR/IE Office Portfolios. https://www.airweb.org/article/2024/11/25/developing-data-science-and-advanced-analytics-capabilities-to-expand-ir-ie-office-portfolios

Zheng, H., & Webber, K. (2023). AI in higher education: Implications for institutional research. Carnegie Mellon University. https://www.airweb.org/article/2023/03/30/ai-in-higher-education-implications-for-institutional-research